JavaScript is used by 95% of the 1.74 billion websites on the internet. Despite its popularity, the majority of websites lack the proper knowledge of JavaScript SEO best practices. Read how improper use of Javascript can impact your site search result rankings.

How Google processes Javascript

To understand how to improve JavaScript SEO practices, let’s first take a look at how Google processes JavaScript in websites.

For traditional websites, using only HTML and CSS, the crawling is fairly simple. Googlebot downloads an HTML file. It extracts all the links from the source code and downloads any CSS files. It then downloads all the resources to its indexer and indexes them.

For JavaScript-based websites, things get a little more complicated:

- Googlebot downloads the HTML file as previously.

- This time, however, the links are not found in the source code as they are generated only after executing the JavaScript.

- Googlebot downloads both CSS and JavaScript files.

- GoogleBot now needs to use Google Web Rendering service to execute JavaScript and fetches any data from external APIs and databases.

- It is at this point that the indexer can index the content and identify new links and add them to Googlebot’s crawling queue.

As you be seen the process has more steps than a traditional HTML Page. There are a number of issues that can occur when rendering and indexing JavaScript-based websites.

I will be dealing with these issues in more detail in the next sections.

Coding practices that impact how Googlebot interprets your site

Having a nice user-friendly site does not mean that the site is SEO friendly. In this section we cover client-side coding practices that will generate issues as Googlebot is parsing your site.

1. Lazy-loaded content (Infinite scrolling)

In one of his talks, Martin Splitt went into the details of Google not able to parse pages that implement infinity scrolling.

When a user lands on a page with infinite scrolling, a portion of the page will be shown. When the user scrolls down new content will be loaded from the backend and presented to the user.

Googlebot does not know how to scroll pages. When Googlebot lands on a page, it crawls only what is immediately visible. This will result in a portion of data missing from Google’s search index.

Martin Splitt recommends only lazy loading images and videos and using Intersection Observer API to asynchronously observe any changes in the HTML elements in the page’s viewport.

An alternative, recommended by Google’s official documentation, states that lazy loading is not an option, to use paginated loading with infinite scrolling. Paginated loading allows Google to link to a specific place in the content instead of only the top visible part of the content.

2. Dynamic Content

Rendering is the process of taking the content and displaying it to the user and crawler. There are two types of rendering: server-side rendering and client-side rendering.

In the case of client-side rendering, the server will need to send the templates and Javascript files to the client, the JavaScript then retrieves the data from the backend and fills the templates as the data appears. Since the content is not all immediately displayed, content that requires Javascript to be fetched will not be indexed.

Google recommends Dynamic rendering when your site is very heavy on Javascript and content changes rapidly. The process requires the server-side to detect the type of user agent and to send the user agent content that is pre-rendered and that is specific to the user agent. You can optimize your site to send Googlebot a pre-rendered page and serve it a static HTML version.

This approach is supported by Google and there are services you can use like Puppeteer to implement this. This method is highly recommended for JavaScript generated content that changes rapidly and JavaScript features that aren’t supported by crawlers.

Test how Google sees the page

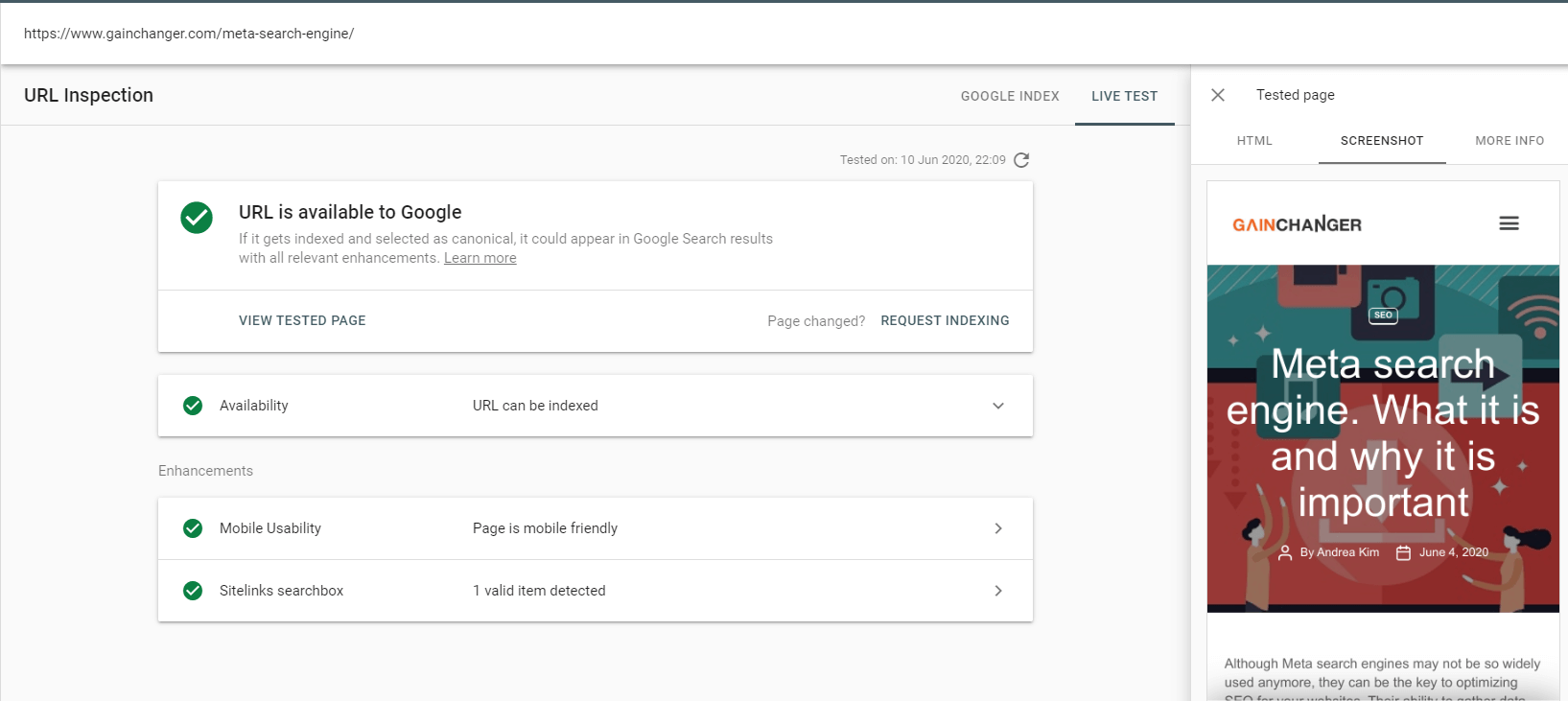

If your website has JavaScript, then it’s important to make sure your website content can be rendered properly. One way of doing this is to use the Google URL Inspection Tool.

With the URL inspection feature, you can render a page as Googlebot sees it. You can also check out the HTML tab which allows you to see the DOM (the code after your page is rendered).

If your page is not fully visible or content is not present you have an issue of how GoogleBot is interpreting the content of your site.

Googlebot may decide to block some of your content for a number of reasons. Google optimizes its crawlers to only download relevant resources. Content blocking may happen in these scenarios:

- Web Rendering Service algorithm decided it’s not necessary for rendering.

- The scripts take too long to execute (timeouts).

- If the content needs crawler to click, scroll or complete any other action to appear, it won’t be indexed.

A common mistake that web developers do, is including a ‘no-index’ tag inside HTML or adding a disallow Javascript entry inside robots.txt. When Googlebot crawls for the first wave to check the HTML markup, it will decide not to crawl the second time around for the Javascript content.

Performance and Usability Issues caused by Javascript

Google announced that Core Web Vitals will be used as a metric in search ranking. In this section we will take a look at how these bad practices in Javascript SEO can really affect your performance with crawling, indexing and usability:

1. Render Blocking

When the browser encounters an external script it has to download it and execute it before it can continue to parse the HTML. This may incur a round trip to the server which will block the rendering of the Page. Non-critical Javascript should be made asynchronous and deferred.

2. Javascript not minified

Minifying JavaScript is a technique used to decrease the processing time needed to parse, compile and execute your JavaScript code. Not doing this can greatly reduce the performance for crawlers and may choose to timeout or skip rendering.

3. Multiple Javascript Files

Each Javascript file will require a request to the server to download the file. The fewer Javascript files there are the fewer round trips to the server. Make sure that you remove any unused Javascript files.

4. Minimize work on the main thread

By default, the browsers run all scripts in a single thread called the main thread. The main thread is where the browser processes user events and paints.

This means that long-running tasks will block the main thread giving way to unresponsive pages. The less work required on the main thread the better.

Make use of service workers to prevent functionality running directly on the main thread for better performance. This will help the crawler avoid timing out when requesting data for rendering.

5. Long jobs

A user may notice your UI to be unresponsive if any function blocks the main thread for more than 50ms. These types of functions are called Long jobs. Most of the time, it is due to loading too much data than the user needs at the time.

To resolve this, try to think about breaking your jobs into smaller asynchronous tasks. This should improve your Time To interactive (TIT) and First Input Delay (FID)

6. Client-Side rendering

Javascript Frameworks like React, Vue and Angular make it very easy to build a single page application with minimal interaction with the backend, unless new data is required.

Such an approach improves the usability and response of the page as the user does not need to wait for the Postbacks each time user accesses a new page.

This is good as long as Javascript will not take too much time to execute. If the volume of Javascript is large, the browser will take time to download the file and execute it introducing delays to interact with user actions.

When building sites that are heavy on client-side rendering, consideration should be given to limiting the size of critical Javscript that will be needed to render the Page.

Google also suggests the approach of using server-side rendering and utilizing virtual-DOM and use the client-side Javascript to re-hydrate (deserialize) virtual DOM into real DOM elements. The concept of hydration is supported by major frameworks like Vue and React.

7. Use of Javascript for Navigation

It is critical that the information that is important for Google to index is created within your HTML markup. Links are also essential to add inside your HTML using the anchor tags. Google advises refraining from using other elements and Javascript event handlers for your linking strategies.

Don’t be afraid of Vanilla Javascript

Vanilla JavaScript is Javascript without any additional libraries. Javascript is now a very powerful language, and complex functionality can be implemented without the need to use libraries like JQuery.

You can accomplish a lot with plain old Javascript. Don’t be lazy.

Final thoughts & considerations

To stay ahead of the game, implementing the right JavaScript SEO practices is important to enabling search engine crawlers to properly render and index your pages. Javascript-based rendering requires further processing from the crawler and it is not done in the initial crawling phase.

So, it is essential to apply the following techniques to make sure you do not suffer from being skipped by the crawler!

At Gainchanger we automate the tedious part of SEO to allow you to scale your results exponentially and focus on what really matters.

Get in touch for a free 5-minute consultation or to start scaling your strategies today.